AI’s Next Frontier: What to Expect at NVIDIA GTC 2025

The technology world’s attention turns to San Jose as NVIDIA prepares to host its flagship GPU Technology Conference (GTC) from March 17 to 21, 2025. Often described as the Olympics of AI innovation, this year’s event is expected to bring announcements that could shape the industry for years to come. With 25,000 attendees expected in person and an estimated 300,000 joining virtually, GTC 2025 will serve as a focal point for global AI development. It features more than 1,000 sessions led by 2,000 speakers, and nearly 400 exhibitors will be showing their latest technologies.[1]

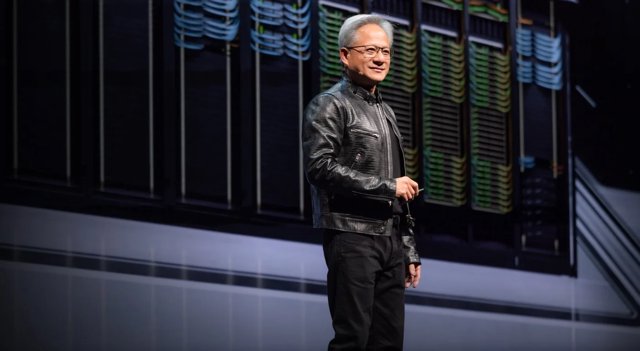

NVIDIA's hardware underpins much of the AI boom, so its announcements carry extraordinary weight for everyone from developers to market analysts. CEO Jensen Huang’s keynote, scheduled for Tuesday, March 18, is expected to set the conference’s tone and may unveil products that define the next phase of computing.

The Main Attractions: What’s on the Horizon

Next-Generation GPU Architecture

The centrepiece is expected to be NVIDIA’s newest GPU technology. Industry insiders anticipate significant news about the Blackwell B300 series, codenamed Blackwell Ultra, which Huang has previously confirmed for release in the second half of this year. The chips are rumoured to deliver substantially higher computing performance along with 288 GB of memory, a meaningful increase for training and deploying increasingly data-hungry AI models.[2]

The announcements may not stop there. Attendees can also expect details about NVIDIA’s Rubin GPU series, slated for 2026, which Huang has characterised as a “big, big, huge step up” in computational capacity. Some reports even suggest the keynote could offer a glimpse of what comes after Rubin, sketching out NVIDIA’s longer-term GPU roadmap.

Physical AI and Robotics

Robotics is expected to take centre stage at this year’s GTC as the line between virtual AI and physical systems continues to blur. NVIDIA has steadily expanded its robotics platform, and GTC 2025 could showcase how its computing technologies are enabling increasingly capable autonomous systems across industrial, consumer, and specialised applications.

Combining AI with physical systems is one of the most compelling frontiers in technology. Demonstrations at GTC may show how neural networks trained in simulated environments can be transferred to real-world machines, a step that has traditionally been difficult to achieve with high precision and reliability.

Sovereign AI: Computing Independence

As geopolitical tensions reshape the global technology landscape, “sovereign AI” has emerged as a critical strategic concern for nations and enterprises alike. The concept, centred on developing AI capabilities that can function within a specific jurisdiction without relying on foreign infrastructure or data, is likely to receive substantial attention at GTC 2025.

NVIDIA’s approach to sovereign AI infrastructure could shape how countries build their own AI ecosystems in an increasingly fragmented global technology environment. Expect discussions of specialised hardware configurations, localised data centres, and frameworks designed to meet differing regulatory requirements across regions.

The Edge Computing Revolution

AI Decentralisation

One of the most profound shifts in AI implementation is the movement toward decentralised computing, pushing intelligence closer to where data originates. This trend is particularly crucial for applications demanding real-time decision-making, such as autonomous vehicles, industrial automation, and smart city infrastructure.

NVIDIA’s Jetson modules, which package GPU computing into compact system-on-modules, have become a cornerstone of edge AI development. At GTC 2025, NVIDIA may announce new Jetson variants or upgrades that deliver more computing power without sacrificing energy efficiency, a balance that is critical for edge deployment.

Rugged Edge Computing: Specialised computing hardware engineered to operate reliably in hostile environments characterised by extreme temperatures, vibration, dust, moisture, or unstable power conditions. These robust systems enable AI deployment in industrial, outdoor, and mission-critical settings where standard hardware would inevitably fail.

Quantum Computing: The Next Computing Paradigm

Quantum Day Takes Centre Stage

A dedicated “Quantum Day” on March 20 signals NVIDIA’s growing commitment to quantum computing.[3] Although Huang stated at CES that true quantum computing remains “decades away,” NVIDIA clearly sees strategic value in establishing a position in this emerging field.

The sessions will likely explore how NVIDIA’s classical computing architecture can complement quantum approaches through simulation and hybrid systems that combine the strengths of both paradigms. Industry watchers should look for potential partnerships or toolkits that bridge traditional GPU computing and quantum research.

Industry Context: Challenges and Opportunities

Overcoming Technical Hurdles

The path to next-generation AI has not been entirely smooth for NVIDIA. Reports indicate that early Blackwell systems suffered from overheating problems, prompting some customers to scale back their orders. How NVIDIA has addressed these engineering challenges, and whether its fixes hold up, will be closely scrutinised during GTC presentations and demonstrations.

Navigating Geopolitical Headwinds

U.S. export controls and tariff concerns have weighed on NVIDIA’s share price in recent months, adding to market uncertainty. How the company navigates these restrictions while defending its global market leadership will likely shape announcements about product availability, manufacturing partnerships, and regional deployment.

Competition from Efficient AI Models

The rise of Chinese AI lab DeepSeek, which has developed remarkably efficient models that rival those from leading AI labs, has raised questions about future demand for NVIDIA’s high-powered GPUs. Huang has countered that such developments actually benefit NVIDIA by accelerating broader AI adoption, but the company’s positioning relative to these efficiency trends bears close monitoring.

Power-Hungry Reasoning Models

As AI evolves toward more sophisticated reasoning, exemplified by models such as OpenAI’s o1, computational demands are rising sharply: these models generate long chains of intermediate reasoning before answering, which consumes far more compute at inference time. NVIDIA appears ready to embrace this challenge, with Huang describing such models as “NVIDIA’s next mountain to climb”. GTC presentations will likely highlight how the company’s hardware roadmap aligns with these workloads.

The Future Takes Shape

GTC 2025 arrives at a pivotal moment for AI technology. The initial wave of generative AI has transformed how we conceptualise machine capabilities, but the more challenging work of embedding these technologies into physical systems, critical infrastructure, and scientific research is just beginning.

As NVIDIA continues to push the boundaries of what is computationally possible, GTC offers a window into not just the company’s strategic direction but the technological trajectory of the entire industry. Whether you are a developer, researcher, investor, or technology enthusiast, the announcements and discussions at this year’s conference will help shape our understanding of AI’s next chapter.

For those unable to attend in person, NVIDIA will livestream Huang’s keynote address and numerous sessions online, making this glimpse into the future accessible worldwide. The company has even planned a special pre-keynote show hosted by the “Acquired” podcast to build anticipation before Huang takes the stage.

In an industry where yesterday’s science fiction routinely becomes tomorrow’s commonplace technology, GTC 2025 promises to once again accelerate the timeline from imagination to implementation.

FAQ: NVIDIA GTC 2025

What makes GTC 2025 particularly significant compared to previous years?

GTC 2025 arrives at a critical inflection point for AI, as the industry moves from the initial generative AI boom toward more demanding applications in physical systems, reasoning models, and scientific computing. With challenges mounting around chip reliability, geopolitical restrictions, and emerging competitors, NVIDIA’s announcements this year could strongly influence the direction of AI development.

Will the announcements at GTC 2025 primarily benefit AI researchers or have broader impacts?

While researchers will undoubtedly benefit from advancements in GPU architecture and AI frameworks, GTC 2025’s focus on edge computing, physical AI, and domain-specific solutions suggests far-reaching implications across diverse industries. Announcements are likely to impact automotive development, manufacturing, robotics, healthcare, and consumer electronics, making this year’s conference relevant to a much broader audience than just the research community.

How might NVIDIA address the efficiency challenges posed by emerging AI models?

NVIDIA will likely present a two-pronged approach: delivering significantly more raw computing power through next-generation architectures such as Blackwell Ultra and Rubin, while also introducing software optimisations that improve efficiency. The company may also highlight configurations tailored to different AI workloads, reflecting a shift away from one-size-fits-all AI computing toward solutions built for specific applications.

What should investors and industry watchers look for beyond the flashy product announcements?

Beyond new GPU reveals, pay attention to NVIDIA’s strategy for navigating complex export controls, its strategic partnerships with system integrators and cloud providers, and how it positions itself relative to specialised AI chips from increasingly capable competitors. The company’s approach to quantum computing initiatives, despite Huang’s caution about timeframes, may also provide valuable insight into its long-term diversification strategy beyond traditional GPU development.

Jargon Explained

Sovereign AI: The development of AI technologies, infrastructure, and data pipelines that can operate independently within specific national or regulatory boundaries, reducing dependence on foreign technologies or platforms while maintaining control over sensitive data and computing resources.

Edge Computing: A distributed computing paradigm that brings computation and data storage closer to the location where it’s needed. Unlike cloud computing, which centralises resources in distant data centres, edge computing processes data locally on devices or nearby servers, reducing latency and bandwidth use while improving reliability and privacy.

Parallel Computing: A type of computation in which many calculations or processes are carried out simultaneously. NVIDIA’s GPUs excel at this approach, using thousands of simpler cores to process many data points at once, which makes them well suited to AI workloads that involve massive datasets.

Rugged Edge Computing: Specialised computing hardware designed to operate reliably in harsh environments characterised by extreme temperatures, vibration, dust, moisture, or unstable power conditions. These systems enable AI deployment in industrial, outdoor, and mission-critical settings where standard hardware would fail.