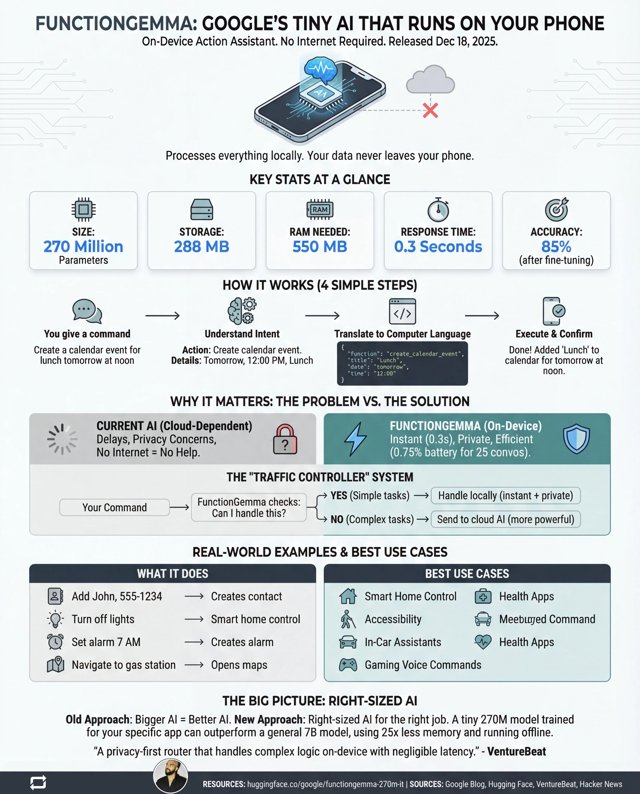

On 18 December 2025, Google quietly released FunctionGemma, a tiny 270-million-parameter AI model designed to run entirely on-device. The press release focused on smartphones: setting reminders, toggling torches, the usual digital assistant fare. But buried in those specs is something far more interesting: a blueprint for making robots dramatically cheaper.

Here’s the dirty secret of today’s “smart” robots: most of them aren’t smart at all. They’re terminals. The intelligence lives in some data centre thousands of kilometres away, connected by an internet link that adds latency, costs money, and fails the moment you walk into a dead zone. Every time your warehouse robot needs to make a decision, it’s ringing home like a nervous teenager asking permission.

FunctionGemma changes the maths entirely.

The Numbers That Matter

Let’s skip the marketing waffle and look at what actually matters for robotics:

- 288 MB storage footprint – Small enough for the flash storage on a low-cost single-board computer, though too large for a typical microcontroller

- 550 MB RAM – A Raspberry Pi 4 ships with up to 8 GB, so there is plenty to spare

- 0.3 second response time – On-device, no network round-trip

- 58% accuracy baseline → 85% after fine-tuning – Trainable for specific tasks

That last point is crucial. FunctionGemma isn’t meant to be a general-purpose chatbot. It’s designed to be fine-tuned for narrow, specific tasks—exactly what robots do. A warehouse robot doesn’t need to discuss philosophy. It needs to understand “pick up box A, move to shelf B” and execute flawlessly, thousands of times a day.
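Returning to the storage and memory figures above, a quick back-of-the-envelope check shows what they imply. This is a minimal sketch, assuming 1 MB means 10^6 bytes and assuming a hypothetical 512 MB reserve for the operating system; neither assumption comes from Google.

```python
# Figures quoted in the list above.
PARAMS = 270_000_000   # parameter count
STORAGE_MB = 288       # on-disk footprint
MODEL_RAM_MB = 550     # runtime memory


def bits_per_parameter(storage_mb: float, params: int) -> float:
    """Average storage bits per parameter, assuming 1 MB = 10**6 bytes."""
    return storage_mb * 1_000_000 * 8 / params


def fits_in_ram(device_ram_mb: float, os_reserve_mb: float = 512) -> bool:
    """True if the model fits after setting aside RAM for the OS.

    os_reserve_mb is a hypothetical allowance, not a measured value.
    """
    return device_ram_mb - os_reserve_mb >= MODEL_RAM_MB


if __name__ == "__main__":
    print(f"{bits_per_parameter(STORAGE_MB, PARAMS):.1f} bits per parameter")
    # Raspberry Pi 4 RAM options, in MB.
    for ram_mb in (1024, 2048, 4096, 8192):
        print(ram_mb, "MB device:", "fits" if fits_in_ram(ram_mb) else "too tight")
```

The result is roughly 8.5 bits per parameter, which would be consistent with weights stored at about 8-bit precision plus some overhead. That is an inference from the arithmetic rather than a published detail.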

Why Cloud-Dependent Robots Are a Dead End

The current paradigm for “intelligent” robots is fundamentally broken. Consider what happens when your robot needs to make a decision:

- Sensor data captured

- Data compressed and sent to cloud

- Cloud server processes request

- Response sent back

- Robot acts

That’s five steps with multiple failure points. Network congestion? Robot freezes. Server overloaded? Robot waits. Internet outage? Robot becomes an expensive paperweight. And you’re paying for every millisecond of compute time and every megabyte of data transfer.
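To see why a longer chain is more fragile, treat each step as succeeding independently with some probability and multiply. The sketch below does exactly that. Every probability in it is a hypothetical value chosen for illustration, not a measurement of any real system.

```python
from math import prod

# Hypothetical per-step success probabilities (illustrative only).
CLOUD_PIPELINE = {
    "capture sensor data": 0.999,
    "compress and upload": 0.99,
    "cloud processing": 0.995,
    "download response": 0.99,
    "robot acts": 0.999,
}

LOCAL_PIPELINE = {
    "capture sensor data": 0.999,
    "on-device inference": 0.999,
    "robot acts": 0.999,
}


def chain_success(steps: dict[str, float]) -> float:
    """Probability that every step succeeds, assuming independent failures."""
    return prod(steps.values())


if __name__ == "__main__":
    print(f"cloud pipeline: {chain_success(CLOUD_PIPELINE):.4f}")
    print(f"local pipeline: {chain_success(LOCAL_PIPELINE):.4f}")
```

With these made-up inputs the cloud chain completes about 97.3% of the time against about 99.7% for the local one. The exact figures matter less than the shape: each extra network hop multiplies in another chance to fail.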

For a single household vacuum, this might be tolerable. For a fleet of 500 warehouse robots running round the clock? The cloud bills alone could bankrupt you, and the latency makes real-time coordination nearly impossible.
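The fleet arithmetic is easy to sketch. The 500 robots come from the example above; the decision rate and the price per thousand requests are hypothetical placeholders, so substitute your own provider's figures before drawing conclusions.

```python
def monthly_requests(robots: int, decisions_per_minute: float, days: int = 30) -> float:
    """Cloud requests per month for a fleet running round the clock."""
    return robots * decisions_per_minute * 60 * 24 * days


def monthly_cloud_cost_gbp(requests: float, price_per_1000_gbp: float) -> float:
    """Monthly bill in pounds at a flat price per thousand requests."""
    return requests / 1000 * price_per_1000_gbp


if __name__ == "__main__":
    # Hypothetical inputs: 6 decisions per minute, 50p per 1,000 requests.
    requests = monthly_requests(robots=500, decisions_per_minute=6)
    cost = monthly_cloud_cost_gbp(requests, price_per_1000_gbp=0.50)
    print(f"{requests:,.0f} requests per month, about £{cost:,.0f}")
```

Under those placeholder inputs the fleet sends roughly 130 million requests a month. The cost scales linearly with fleet size and decision rate, which is why per-request pricing that looks trivial for one device stops looking trivial at 500.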

The Edge Computing Revolution Hits Robotics

FunctionGemma represents a philosophical shift: instead of asking “how do we connect robots to an ever-smarter cloud,” Google is asking “how do we make the cloud small enough to fit in a robot.”

This isn’t unprecedented. The automotive industry figured this out years ago—your car’s automatic emergency braking doesn’t ring Google before deciding to stop. The decision happens locally, in milliseconds, because latency kills (literally). But until now, the AI models capable of understanding natural language commands and translating them into actions were too massive for edge deployment.

What Cheap Robotics Looks Like

Imagine a £150 home assistant robot with:

- Full natural language understanding for common commands

- No monthly subscription fees

- Works perfectly during internet outages

- Your voice data never leaves the device

- Instant response to commands

Or picture agricultural robots that can operate in fields with zero mobile coverage. Disaster response drones that don’t need Starlink to function. Elderly care companions that don’t require a cloud subscription to remind someone to take their medication.

The cost savings compound at every level. Cheaper compute hardware means cheaper robots. No cloud dependency means no recurring fees. Local processing means simpler networking requirements. Privacy by design means easier regulatory approval.

The “Traffic Controller” Architecture

Google isn’t naïve enough to claim FunctionGemma can replace large language models entirely. Its proposed architecture is smarter: use FunctionGemma as a local “traffic controller” that handles the simple commands making up the bulk of requests immediately, and only routes complex queries to the cloud when necessary.

For a robot, this might look like:

- Handled locally: “Move forward,” “Stop,” “Pick up the red object,” “Return to charging station”

- Routed to cloud: “Analyse this unusual object and tell me what it is,” “Plan an optimal route through this new environment”

This hybrid approach gives you the speed and reliability of edge computing for routine operations, whilst preserving access to cloud-scale intelligence for genuine edge cases.
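The routing logic can be sketched in a few lines. This is a minimal illustration, not Google's implementation: `local_parse` is a hypothetical stand-in that uses exact phrase matching where a real system would call the on-device model, and the function names in `LOCAL_ACTIONS` are invented for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    handler: str  # "local", "cloud" or "deferred"
    action: str   # function name to run locally, or the raw query otherwise


# Hypothetical command table mapping phrases to local function names.
LOCAL_ACTIONS = {
    "move forward": "move_forward",
    "stop": "stop",
    "pick up the red object": "pick_up_red_object",
    "return to charging station": "return_to_dock",
}


def local_parse(utterance: str) -> Optional[str]:
    """Stand-in for the on-device model: exact match after normalisation."""
    key = utterance.lower().strip(" .,!?")
    return LOCAL_ACTIONS.get(key)


def route(utterance: str, cloud_available: bool = True) -> Decision:
    """Handle known commands locally; send the rest to the cloud if reachable."""
    action = local_parse(utterance)
    if action is not None:
        return Decision("local", action)
    if cloud_available:
        return Decision("cloud", utterance)
    # No local match and no connection: queue the request rather than guess.
    return Decision("deferred", utterance)


if __name__ == "__main__":
    print(route("Stop!"))
    print(route("Plan an optimal route through this new environment"))
    print(route("Analyse this unusual object", cloud_available=False))
```

The design choice worth noting is the third branch. When the robot is offline and the command is not one it knows, deferring is safer than forcing a best guess onto a physical machine.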

The Fine-Tuning Factor

Perhaps the most important aspect for robotics is FunctionGemma’s trainability. The baseline 58% accuracy sounds terrible, and it is for a general-purpose assistant. But fine-tuned on a narrow, task-specific set of commands and actions, it jumps to 85%.

Now imagine what happens when a robotics company fine-tunes it specifically for their use case:

- Warehouse picking robot: 50 core commands, optimised vocabulary, accuracy potentially above 95%

- Delivery drone: navigation commands, safety overrides, weather responses

- Manufacturing arm: precise movement instructions, quality control checks

Each robot type gets a bespoke AI brain, perfectly sized for its needs and trained on exactly the vocabulary it will encounter. This is the opposite of the “one giant model to rule them all” approach—it’s modular, efficient, and deployable.
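One way to picture a narrow command vocabulary is as a set of templates expanded into training pairs, each linking an utterance to the function call it should produce. The sketch below generates such pairs for a warehouse robot. The record layout, function names and slot values are all hypothetical; this is not FunctionGemma's actual fine-tuning format.

```python
import json

# Hypothetical phrasings for each robot function.
TEMPLATES = {
    "pick_up": ["pick up {item}", "grab {item}", "collect {item}"],
    "move_to": ["move to {place}", "go to {place}", "head over to {place}"],
}

# Hypothetical slot values the robot will encounter.
SLOTS = {
    "item": ["box A", "box B"],
    "place": ["shelf B", "shelf C"],
}


def build_examples() -> list[dict]:
    """Expand every template with every slot value into (prompt, call) records."""
    examples = []
    for function_name, phrasings in TEMPLATES.items():
        for phrase in phrasings:
            slot = "item" if "{item}" in phrase else "place"
            for value in SLOTS[slot]:
                examples.append({
                    "prompt": phrase.format(**{slot: value}),
                    "call": {"name": function_name, "arguments": {slot: value}},
                })
    return examples


if __name__ == "__main__":
    for record in build_examples():
        print(json.dumps(record))  # one JSON object per line
```

Two functions, three phrasings and two slot values already yield twelve examples. A real vocabulary of 50 commands would grow the same way, which is part of why narrow fine-tuning sets are cheap to produce.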

The Implications for Robot Manufacturers

For companies building robots, FunctionGemma represents a strategic inflection point:

Cost structure changes: The bill of materials for a “smart” robot could drop by hundreds of pounds when you don’t need expensive networking hardware and cloud connectivity redundancy.

Subscription model dies: Robot-as-a-Service relies on cloud dependency to lock customers into recurring payments. Local AI breaks that model, and customers will notice.

Reliability becomes achievable: A robot that can function autonomously can stay up without heroic networking infrastructure, because its uptime no longer depends on the link.

Privacy becomes a feature: Data that never leaves the device is far harder to breach, leak, or subpoena.

What’s Missing

Let’s not oversell this. FunctionGemma has real limitations:

- No multi-step reasoning: “Pick up the box, check the label, and put it in the right bin” is currently beyond its abilities

- Indirect commands struggle: “The room is too bright” won’t trigger a light adjustment

- 15% error rate: Fine for many applications, dangerous for others

But these are software problems with known remedies. Multi-step reasoning is what chain-of-thought prompting and task decomposition are for. Indirect commands can be handled by fine-tuning on paraphrases. Error rates should drop with larger training datasets and model iterations.
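The 15% error rate matters more once commands are chained. If a task is split into single calls and each call is independently correct with probability p (a simplifying assumption), the chance that the whole sequence is right is p raised to the number of calls. A small sketch:

```python
def sequence_success(per_call_accuracy: float, calls: int) -> float:
    """Probability that every call in a sequence is correct, assuming independence."""
    return per_call_accuracy ** calls


def max_calls(per_call_accuracy: float, target: float) -> int:
    """Longest sequence whose overall success probability stays at or above target."""
    if not 0 < per_call_accuracy < 1:
        raise ValueError("per_call_accuracy must be strictly between 0 and 1")
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    calls = 0
    while sequence_success(per_call_accuracy, calls + 1) >= target:
        calls += 1
    return calls


if __name__ == "__main__":
    for accuracy in (0.85, 0.95):
        three_step = sequence_success(accuracy, 3)
        print(f"accuracy {accuracy:.0%}: three-call task succeeds {three_step:.1%} of the time")
    print("calls possible at 85% before success drops below 50%:", max_calls(0.85, 0.5))
```

At 85% per call, a three-call task such as pick, check and place succeeds only about 61% of the time. At 95% the same task succeeds about 86% of the time, which is why per-command accuracy gains count for so much in robotics.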

The hardware constraints—that’s the hard problem. And Google just proved that 270 million parameters is enough for practical function calling. That’s the breakthrough.

The Bigger Picture

FunctionGemma isn’t going to single-handedly create the robot revolution. But it’s a proof of concept that the AI industry desperately needed: you don’t need a trillion-parameter model to make machines useful. You need the right-sized model for the right job.

The implications extend beyond robotics into IoT, wearables, medical devices, and anything else that needs to make decisions without ringing home. But for robotics specifically, this feels like the moment the industry has been waiting for—the moment when “smart robot” stops requiring “expensive robot.”

The future of affordable robotics isn’t in the cloud. It’s in 288 megabytes of carefully trained weights, running locally, responding instantly, working everywhere. Google just gave us a glimpse of what that looks like. Now it’s up to the robot makers to build it.