The dirty little secret of modern robotics? Most of those jaw-dropping demos you see are little more than high-tech puppet shows. We’re talking an entire platoon of human operators, kitted out in complex, eye-wateringly expensive teleoperation rigs, remotely guiding a robot’s every single twitch. All of it, just to generate the data needed to teach the poor bot anything remotely useful. It’s a glacial, bank-breaking, and frankly, utterly unscalable process. Enter Tony Zhao and Cheng Chi, Stanford PhD dropouts and the brains behind Sunday AI, who took one look at this “scaling deadlock” and decided to skip the whole faff entirely.

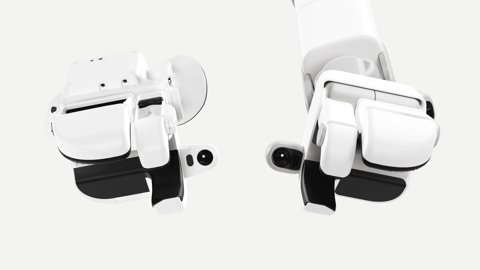

Their solution, the secret sauce powering a spanking new foundation model dubbed ACT-1, is deceptively simple, yet utterly brilliant: if you want a robot to master a task, just blooming well do it yourself. But instead of a £16,000 teleop rig (that’s roughly $20,000 for our American chums), Sunday’s ingenious engineers are wielding a £160 “Skill Capture Glove.” This glove, meticulously co-designed to mirror the precise geometry and sensor layout of their Memo robot’s hand, hoovers up the subtle, contact-rich data of human motion. The premise is audacious, really: if a human can pull it off while wearing the glove, the robot can learn it. No more digital strings attached, no more robotic puppetry required.

The Data Bottleneck and the Glove Solution

Sunday’s core belief is that the robotics revolution isn’t being held back by a lack of shiny hardware, raw compute power, or even funding. Oh no, the real villain in this piece is a single, definitive constraint: data. While Large Language Models can gorge themselves on the entire internet, robotics has no such vast corpus of real-world interaction data to chew on. Companies like Tesla can leverage millions of cars for data collection, but let’s be honest, robotics startups don’t have that kind of luxury. Teleoperation was the industry’s rather clunky answer, but it’s a brute-force approach that’s both capital-intensive and painfully slow.

The Skill Capture Glove is Sunday’s elegant end-run around this rather sticky wicket. By decentralising data collection, anyone, anywhere, can contribute to the training set without needing a physical robot on-site. This delivers two rather smashing advantages:

- Capital Efficiency: Sunday boldly claims the glove is two orders of magnitude cheaper than a standard teleop setup, dramatically slashing the cost of data acquisition.

- Data Quality: For those tricky tasks that rely on feel – like figuring out the perfect force needed to fold a sock or gently seat a wine glass in a dishwasher rack – the glove provides natural force feedback that remote teleoperation simply can’t replicate. It’s like the difference between actually holding a cup of tea and being told what it feels like.
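For the sceptics, the "two orders of magnitude" claim is easy enough to sanity-check with the prices quoted above. The snippet below is only a back-of-the-envelope sketch: the figures are the ones reported in this article, while the helper names (`orders_of_magnitude`, `units_for_budget`) are made up for illustration and have nothing to do with Sunday's own tooling.

```python
import math

# Prices as quoted in this article (Sunday's claims, not independently verified).
TELEOP_RIG_GBP = 16_000
GLOVE_GBP = 160


def orders_of_magnitude(expensive: float, cheap: float) -> float:
    """Return log10 of the cost ratio; 2.0 means a 100x price gap."""
    return math.log10(expensive / cheap)


def units_for_budget(budget: float, unit_cost: float) -> int:
    """Return how many whole units a budget buys at a given unit cost."""
    return int(budget // unit_cost)


if __name__ == "__main__":
    gap = orders_of_magnitude(TELEOP_RIG_GBP, GLOVE_GBP)
    gloves = units_for_budget(TELEOP_RIG_GBP, GLOVE_GBP)
    print(f"Cost gap: about {gap:.1f} orders of magnitude")
    print(f"Gloves per teleop-rig budget: {gloves}")
```

In other words, the budget for a single teleop rig would, on these numbers, kit out a hundred glove-wearing data collectors.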

This clever approach allows Sunday to capture data from hundreds of gloriously messy, real-world homes, meticulously building a dataset that truly reflects the “long tail of living,” as they brilliantly put it – yes, that includes cats in dishwashers and all the glorious chaos in between.

From the Dining Table to the Dishwasher

To truly prove ACT-1’s mettle, Sunday showcased what they’re calling “the most complex task ever done by a robot autonomously”: clearing a dinner table and loading a dishwasher. Now, this isn’t just a bit of simple pick-and-place. Oh no. This Herculean task involves 33 unique and 68 total dexterous interactions with 21 different objects – from delicate, transparent wine glasses that would make your granny wince, to robust ceramic plates and fiddly metal utensils.

Throughout this marathon, long-horizon task, the Memo robot navigates over 130 feet (roughly 40 metres for our metric-minded friends), efficiently dumps food waste, and even operates the dishwasher itself. It’s a veritable symphony of fine-grained manipulation and room-scale navigation, all controlled by a single, end-to-end model. Co-founder Tony Zhao openly admits they “shattered plenty of glasses” during development (a rite of passage, perhaps?), but remarkably managed zero breakages over more than 20 live demos. A proper testament, that, to the model’s learned sensitivity.

Zero-Shot Generalisation in the Wild

A robot that only works in its own lab is, let’s be frank, just a glorified science project. To truly prove ACT-1’s adaptability, the team bravely deployed Memo in six utterly unfamiliar Airbnbs. The mission: clear the table and load the dishwasher with absolutely zero environment-specific training. No faffing about with new maps or bespoke programming.

By conditioning the model on 3D maps during training, ACT-1 learns to interpret new layouts rather than simply memorising specific ones. When plonked into a new house, it uses the provided map to navigate to key locations, demonstrating a crucial capability for any robot truly intended for the beautiful chaos of a real home. According to Sunday, ACT-1 is the first foundation model to combine this level of long-horizon manipulation with map-conditioned navigation. That’s pretty smashing, if you ask me.
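To make the "interpret, don't memorise" idea a bit more concrete, here is a deliberately tiny toy. It is emphatically not ACT-1, nor anything Sunday has published: `HomeMap`, `next_step`, and `navigate` are hypothetical names, the "map" is just a dictionary of named coordinates in metres, and the "policy" is plain geometry rather than a learned model. The only point it illustrates is that when the map is an input rather than something baked in, the very same code copes with a house it has never seen.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HomeMap:
    """Hypothetical stand-in for a 3D map: named key locations in metres."""
    locations: dict  # name -> (x, y, z)


def next_step(home_map, position, goal_name, step=0.5):
    """Toy 'policy': take one step of at most `step` metres toward the goal.

    The goal is read from the supplied map, so nothing about a specific
    house is stored in the function itself.
    """
    gx, gy, gz = home_map.locations[goal_name]
    px, py, pz = position
    dx, dy, dz = gx - px, gy - py, gz - pz
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist <= step:
        return (gx, gy, gz)  # close enough: snap onto the goal
    scale = step / dist
    return (px + dx * scale, py + dy * scale, pz + dz * scale)


def navigate(home_map, start, goal_name, step=0.5, max_steps=1000):
    """Repeatedly apply next_step until the goal is reached; return the path."""
    goal = tuple(home_map.locations[goal_name])
    position = tuple(start)
    path = [position]
    for _ in range(max_steps):
        if position == goal:
            break
        position = next_step(home_map, position, goal_name, step)
        path.append(position)
    return path


if __name__ == "__main__":
    # Two made-up homes with the dishwasher in different places.
    home_a = HomeMap({"dishwasher": (3.0, 4.0, 0.0)})
    home_b = HomeMap({"dishwasher": (-2.0, 1.0, 0.0)})
    for home in (home_a, home_b):
        path = navigate(home, (0.0, 0.0, 0.0), "dishwasher")
        print(f"Reached {path[-1]} in {len(path) - 1} steps")
```

A real map-conditioned model would of course be learning from pixels, contact, and a proper 3D representation, but the division of labour is the same: the layout arrives as input at run time instead of being memorised during training.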

Pushing the Frontiers of Dexterity

Beyond the marathon dishwasher task, Sunday is also showing off ACT-1’s rather impressive finesse with two notoriously difficult challenges: folding socks and pulling an espresso shot. While other robots have managed to fold large, predictable items (your grandad’s blanket, perhaps), socks are an absolute nightmare of deformability and self-occlusion. Yet, ACT-1 successfully identifies pairs from a cluttered pile, deftly balls them using multi-finger movements, and deposits them in a basket. Quite chuffed with itself, I imagine.

Operating an espresso machine, meanwhile, demonstrates a rather cunning combination of millimetre-level precision and brute force. The robot performs a mid-air tamp, inserts the portafilter (no easy feat!), and generates the high torque needed to lock it in before pressing the button. These aren’t just flashy demos designed to make you say “ooh” and “aah”; they’re carefully chosen proofs of the high-quality, nuanced data the Skill Capture Glove can provide. It’s all about the data, darling.

Sunday’s approach is a bold gamble, a proper roll of the dice. By betting everything on a novel data collection method, it has bypassed the industry’s biggest bottleneck and produced a model with startling capabilities. The wheeled Memo robot may not have the sci-fi appeal of a bipedal humanoid that could one day bring you a cuppa, but its practical intelligence is utterly undeniable. Sunday has quietly, yet definitively, thrown down a gauntlet, suggesting that the future of robotics may not be built by an army of puppeteers, but by simply showing a robot how it’s done. And frankly, that’s brilliant.