Let’s be frank: when you think of Microsoft, you likely picture the software powering the world’s spreadsheets and desktops, not the robots that might one day build them. The company’s track record in robotics has been, shall we say, a little patchy. Many of us still have a dusty corner of our memory reserved for the Microsoft Robotics Developer Studio, a mid-2000s attempt to create a “Windows for robots” that eventually went the way of the dodo. It was a valiant effort, but ultimately a solution in search of a problem, launched into a market that wasn’t quite ready for it.

But this is 2026, and the landscape has shifted beneath our feet. Microsoft, now supercharged by its formidable alliance with OpenAI, has evolved from a software giant into an undisputed AI behemoth. And it’s taking another, far more sophisticated swing at the robotics crown. This time, it’s not just about tossing a developer kit into the wild. It’s about crafting a single, universal brain—a foundation model for the physical world capable of powering everything from a multi-jointed factory arm to a humanoid butler. The goal is to finally crack the code of “embodied AI”—bridging the chasm between digital reasoning and physical movement.

From Language Models to ‘Physical AI’

For decades, robots have been masterfully efficient—provided they stay in their lane. An automotive assembly line is a roboticist’s paradise: every bolt is exactly where it should be, every movement is rehearsed to the millimetre, and the margin for error is non-existent. However, the moment you drag that robot out of its safety cage and drop it into the messy, unpredictable human world, it becomes little more than a very expensive paperweight. This is the wall Microsoft is determined to tear down.

The company’s grand vision revolves around what it calls “Physical AI,” applying the same “scale-up” logic that made models like GPT-4 so transformative. The spearhead of this movement is Rho-alpha, Microsoft’s flagship robotics model derived from its “Phi” series of vision-language models. As Ashley Llorens, VP at Microsoft Research, puts it, the aim is to create systems that can “perceive, reason, and act with increasing autonomy alongside humans in environments that are far less structured.”

In plain English, they want a robot that doesn’t just follow the command “pick up the mug,” but understands the physics of porcelain, the common-sense logic that you shouldn’t drop it, and the spatial awareness to adjust if the mug is slightly off-centre. It’s a shift from brittle, hard-coded instructions to a fluid, intuitive intelligence.

The VLA+ Advantage: A Delicate Touch

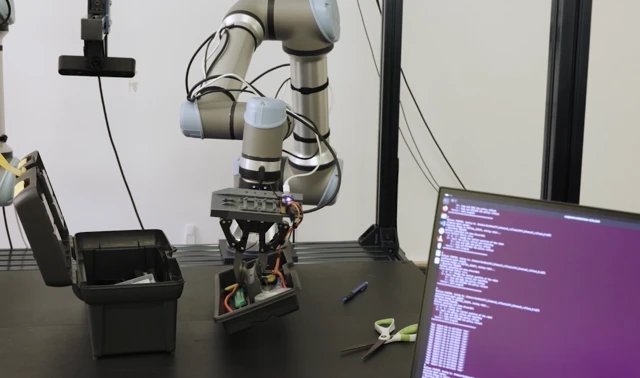

The “secret sauce” behind Rho-alpha lies in its architecture, which Microsoft has dubbed a Vision-Language-Action Plus (VLA+) model. While the vision-language-action (VLA) models from competitors like Google DeepMind lean heavily on cameras and text instructions, Microsoft is adding a further, vital sense: touch. By integrating tactile sensing, the model can grasp the nuances of contact and perform delicate tasks, like plugging in a cable or turning a stiff dial, that are notoriously difficult to master using cameras alone.

Of course, training such a beast presents a massive hurdle: a chronic shortage of high-quality data. You can’t simply scrape the internet for billions of videos of a robot successfully using a screwdriver. To bypass this, Microsoft is leaning heavily into the “digital twin” approach.

“Training foundation models that can reason and act requires overcoming the scarcity of diverse, real-world data,” explains Deepu Talla, Vice President of Robotics and Edge AI at NVIDIA. “By leveraging NVIDIA Isaac Sim on Azure to generate physically accurate synthetic datasets, Microsoft Research is accelerating the development of versatile models like Rho-alpha.”

This blend of synthetic data, dreamed up in high-fidelity simulations, and real-world demonstrations is the key to training these models at scale. If the robot fumbles, a human operator can step in with a 3D mouse to correct the movement, and the system learns from that “intervention” in real time.
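For the technically curious, the idea of blending data sources and logging human corrections can be sketched in a few lines of Python. This is purely an illustration, not Microsoft's training pipeline: the `DemoBuffer` class, its method names, and the 60/30/10 sampling weights are all hypothetical, chosen only to show the shape of the approach.

```python
import random
from dataclasses import dataclass, field


@dataclass
class DemoBuffer:
    """Hypothetical store of (observation, action) pairs from three sources."""
    synthetic: list = field(default_factory=list)      # simulator rollouts
    real: list = field(default_factory=list)           # real-world demonstrations
    interventions: list = field(default_factory=list)  # human corrections

    def record_step(self, observation, policy_action, human_action=None):
        """Return the action to execute; log a correction if a human overrode."""
        if human_action is not None:
            self.interventions.append((observation, human_action))
            return human_action
        return policy_action

    def sample_batch(self, size, weights=(0.6, 0.3, 0.1), rng=random):
        """Draw a training batch, choosing a source for each example by weight.

        The weights are illustrative assumptions; empty sources are skipped.
        """
        pools = [self.synthetic, self.real, self.interventions]
        usable = [(pool, w) for pool, w in zip(pools, weights) if pool]
        if not usable:
            return []
        usable_pools, usable_weights = zip(*usable)
        batch = []
        for _ in range(size):
            pool = rng.choices(usable_pools, weights=usable_weights, k=1)[0]
            batch.append(rng.choice(pool))
        return batch


buffer = DemoBuffer()
# The operator nudges the gripper, so the correction is executed and logged.
executed = buffer.record_step("mug off-centre", "close_gripper", human_action="shift_left")
```

The design choice worth noticing is that a correction is both executed and stored, so the same mistake becomes a training example the next time a batch is drawn.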

An Operating System for the Robotic Age

Should Microsoft pull this off, the ramifications are staggering. A general-purpose robotics model could act as a cloud-based operating system for hardware. Instead of every start-up spending years building their own AI stack from scratch, they could simply licence a world-class foundation model from Microsoft and focus on building better hardware. It would lower the barrier to entry so significantly that we could see a “Cambrian explosion” of new robotic forms and niches.

Naturally, Microsoft isn’t the only one with this ambition. NVIDIA, with its Project GR00T, is building a similar ecosystem by marrying its hardware dominance with the Omniverse simulation platform. Tesla is taking the “vertical” route with Optimus, betting that the data from millions of cars on the road will give it the edge in understanding the physical world. And Google remains a formidable research titan in the field.

Microsoft’s play, however, is classic Microsoft: the platform. By offering Rho-alpha through an early access programme and eventually via Microsoft Foundry, it is inviting the world to build on its foundations. This collaborative strategy, backed by the sheer horsepower of the Azure cloud, is a formidable hand to play.

The dream of a truly “general-purpose” robot is still a few miles down the road. The hurdles of real-world physics, safety protocols, and unit costs remain daunting. But for the first time, the software finally feels up to the task. Microsoft’s aggressive push into “Physical AI” isn’t just another R&D project; it’s a clear shot across the bow. The race to build the brain for the next generation of machines has truly begun—and this time, Microsoft is playing for keeps.