In a move so audacious it practically screams “sci-fi blockbuster,” NVIDIA is throwing its considerable weight behind Starcloud, a startup with the frankly cosmic ambition of relocating data centres to orbit. This Redmond-based outfit, an alumnus of NVIDIA’s Inception programme, claims its orbital data centres will cut energy costs by up to a factor of ten compared with their Earth-bound brethren. The grand plan? To launch a satellite roughly the size of your average kitchen fridge, dubbed Starcloud-1, this November. Its payload? The first NVIDIA H100 GPU to be deployed in space, a chip far more accustomed to the pampered confines of air-conditioned server rooms than the stark, unforgiving vacuum of orbit.

Starcloud’s pitch hinges on two things the cosmos offers in abundance: an effectively inexhaustible supply of solar power and a universe-sized heat sink. In orbit, the pitch goes, these data centres would enjoy near-constant access to sunlight, removing the need for grid power or the tiresome faff of backup batteries. Even more critically, they’d use the cold of deep space, which sits only a few degrees above absolute zero, for passive cooling, radiating heat away rather than consuming the millions of tonnes of water that terrestrial facilities devour. It’s a rather elegant solution, provided, of course, you’re willing to overlook the monumental cost and mind-boggling complexity of launching and maintaining high-performance electronics beyond the comforting embrace of Earth’s atmosphere.
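
For a feel of what “simply radiating heat away” involves, here is a rough back-of-envelope sketch using the Stefan-Boltzmann law. The figures are our assumptions, not Starcloud’s: a heat load of about 700 W (roughly the rated power of an H100 in its SXM form), a radiator emissivity of 0.9, a radiator surface at 300 K, and a single radiating face with no sunlight or Earthshine falling on it. The function name `radiator_area_m2` is purely illustrative.

```python
# Back-of-envelope radiator sizing via the Stefan-Boltzmann law.
# Assumptions (not from the article): ~700 W heat load, 0.9 emissivity,
# 300 K radiator, one radiating face, no absorbed sunlight or Earthshine.

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def radiator_area_m2(heat_w, temp_k, emissivity=0.9, sides=1):
    """Area needed to radiate heat_w watts to deep space at temp_k kelvin."""
    flux = emissivity * SIGMA * temp_k ** 4  # W radiated per m^2 per face
    return heat_w / (flux * sides)


if __name__ == "__main__":
    area = radiator_area_m2(heat_w=700.0, temp_k=300.0)
    print(f"Radiator area: {area:.2f} m^2")
```

Under those assumptions a single chip needs about 1.7 square metres of radiator, which hints at why the cooling panels in the larger designs are measured in kilometres.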

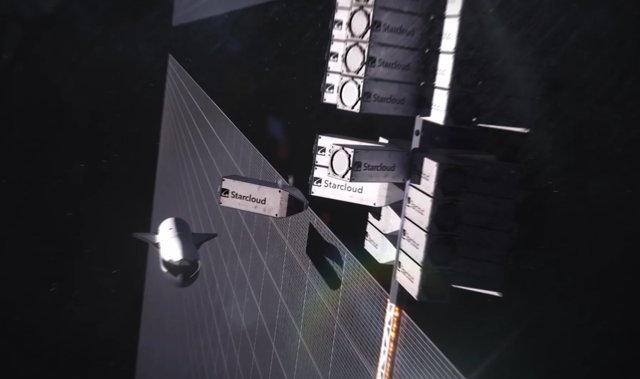

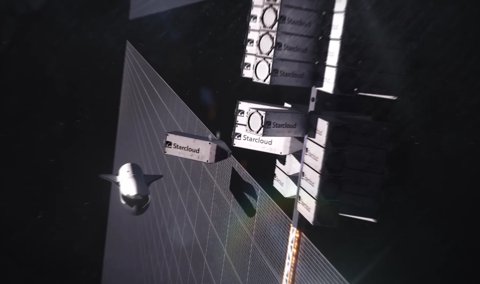

The long-term vision is even more dizzyingly ambitious, sketching out plans for a 5-gigawatt orbital data centre featuring solar and cooling panels that would stretch approximately 4 kilometres in both width and length. While this initial launch serves as a demonstrator, Starcloud’s CEO, Philip Johnston, is already making a rather bold prediction: “in 10 years, nearly all new data centres will be built in outer space.” This vision, he contends, is fuelled by rapidly plummeting launch costs and the insatiable, ever-growing energy demands of AI, which are projected to cause global data centre electricity consumption to more than double by 2030.
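
Those headline numbers can be sanity-checked with a few lines of arithmetic. The sketch below assumes, as a simplification of ours, that the entire 4 km by 4 km area collects sunlight (the article describes it as a mix of solar and cooling panels) and uses the commonly quoted figure of about 1,361 W per square metre for sunlight above the atmosphere. The function name is illustrative.

```python
# Rough check: what power density does 5 GW over a 4 km x 4 km array imply?
# Assumption (ours): the whole area is solar collector, facing the Sun.

SOLAR_CONSTANT_W_M2 = 1361.0  # approx. sunlight intensity above the atmosphere


def required_flux_w_m2(power_w, side_m):
    """Electrical power needed per square metre of a square array."""
    return power_w / (side_m * side_m)


flux = required_flux_w_m2(power_w=5e9, side_m=4000.0)
fraction = flux / SOLAR_CONSTANT_W_M2

print(f"Required output: {flux:.1f} W/m^2")
print(f"Share of incoming sunlight: {fraction:.1%}")
```

That works out to roughly 313 W per square metre, or about 23% of the sunlight arriving, so the stated dimensions are at least in the right ballpark for the stated power, before any area is set aside for radiators.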

Why this matters more than your morning brew

The explosive growth of AI isn’t just creating wonders; it’s brewing an energy consumption crisis of truly epic proportions. Terrestrial data centres already gulp down a staggering 1 to 1.5% of global electricity, a figure that’s poised to rocket into the stratosphere. Starcloud’s plan, while astronomically ambitious, represents a genuinely serious attempt to tackle a problem of planet-sized dimensions. By shunting the energy-intensive core of AI infrastructure off-world, it could, in theory, decouple the relentless march of AI progress from Earth’s increasingly strained energy and water resources. It’s a high-stakes gamble, pitting the improving economics of space launch against the ever-mounting environmental cost of computation here on good old terra firma.