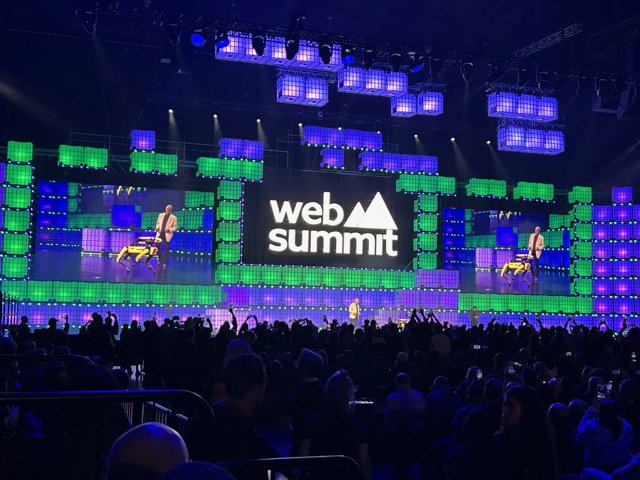

Boston Dynamics, those maestros of mechanical marvels whose eerily agile robots regularly break the internet on YouTube, are now focusing their considerable talents on what’s buzzing inside those metallic heads. At the recent Web Summit in Lisbon, CEO Robert Playter pulled back the curtain on a future where their machines won’t just follow orders but actually understand them, responding to natural language and gestures. This, my friends, is a cracking leap beyond the old ‘point and click’ school of robot wrangling.

Imagine: instead of painstakingly programming every twitch, you’ll simply tell a bot what you fancy it to do. It’s less ‘how to dance the cha-cha’ and more ‘get on with it, old chap!’

This shiny new AI-infused strategy is all about transforming these whirring wonders from obedient automata into genuinely intuitive partners. Take Spot, the nimble quadruped whose industrial ballet already sees it trotting through factories, diligently performing thermal inspections and squinting at gauges. Soon, an operator could just casually ask it to ‘have a gander at the pressure valve on unit three,’ rather than inputting a complex sequence of commands. It’s a proper game-changer, slashing the technical hurdles for deploying these bots in environments as varied as bustling manufacturing floors and, rather more crucially, nuclear facilities where a misplaced decimal point could be a tad problematic. Turns out, they’ve been busy under the bonnet, exploring the wizardry of Large Language Models (LLMs) to imbue their metallic mates with a dose of good old common sense and the smarts to respond to a simple ‘fetch’ or ‘stand by, old bean.’

And this brainy software push isn’t just for Spot; it’s a full-frontal assault on robotic dullness across their entire mechanical menagerie. Stretch, their colossal warehouse workhorse, already shifts millions of boxes a year (think of it as a particularly muscular, articulated ballet dancer). It’ll soon be even more chuffed, benefiting from AI that lets it adapt with the agility of a squirrel to the glorious chaos inside a shipping container – no more fumbling about like a lost tourist. Meanwhile, the bipedal Atlas, still the pin-up boy for general-purpose robotics research, continues its gravity-defying antics. Recent breakthroughs involve Large Behavior Models (LBMs) that empower this humanoid to tackle complex, multi-step tasks from mere high-level language prompts. Basically, Atlas is learning to ‘get a move on and sort that lot out’ rather than needing a step-by-step instruction manual.

Why This Is a Rather Big Deal

Boston Dynamics is, quite frankly, making a rather crucial pivot. They’re moving beyond simply showcasing robots that can perform parkour with alarming grace and are instead putting serious emphasis on what’s bubbling away in their digital brains. By integrating advanced AI for natural language and gesture control, the company aims to make its metallic workforce as approachable as your favourite barista, even for those of us who couldn’t code our way out of a paper bag.

This move from arcane programming incantations to a simple chinwag with your robot amounts to a genuine “paradigm shift.” It could dramatically accelerate the adoption of mobile robots, not just in factories and commercial hubs but, dare we dream, eventually in our own humble abodes. Imagine asking your robot to put the kettle on! It’s no longer merely about a robot that can pull off a jaw-dropping backflip; it’s about one that genuinely grasps what you’re after next. The future, it seems, is less about spectacle and more about savvy.