In a rather smashing move for the robotics world, the Beijing Innovation Center of Humanoid Robotics (X-Humanoid) has pulled an impressive rabbit out of its hat by open-sourcing its XR-1 model. And let’s be clear: this isn’t just another algorithm lobbed onto GitHub like yesterday’s chip wrappers. XR-1 is the first Vision-Language-Action (VLA) model to pass muster with China’s national embodied AI standards, a benchmark designed to move robots from mere lab curiosities to proper, functional machines. The grand unveiling bundles the XR-1 model itself, the RoboMIND 2.0 dataset, and ArtVIP, a library of high-fidelity digital assets for simulation.

At its core, XR-1 is engineered to break down the “perception-action” barrier that leaves most robots looking as if they’ve just woken from a particularly confused nap. It uses what its creators call Unified Vision-Motion Codes (UVMC), a technique that essentially teaches a robot’s eyes and limbs to speak the same language. The payoff is genuinely “instinctive” reactions: think halting a pour quicker than you can say ‘spilled tea’ if someone’s mug suddenly does a runner. Thanks to a three-stage training process, the model can generalise its skills across very different robot bodies, from a dinky dual-armed Franka system to X-Humanoid’s own beefy Tien Kung series of humanoids.

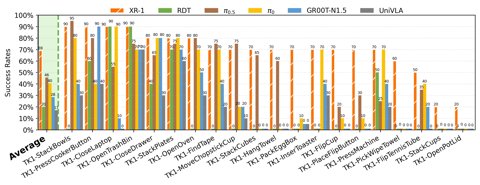

And this isn’t just a load of old cobblers, either. X-Humanoid has demonstrated XR-1 navigating five different types of door (because who hasn’t wrestled with a tricky door?), performing precise industrial sorting, and even handling heavy loads in Cummins factories. Backing this up are the RoboMIND 2.0 dataset, which now contains over 300,000 task trajectories, and the ArtVIP digital twin assets. The team reckons that adding this simulation data to the training mix can boost real-world task success rates by more than 25%. That’s not to be sniffed at.

Why is this important?

So, why should you give a flying fig about this, beyond the sheer technical wizardry? By open-sourcing not just a model but the whole blooming caboodle (an entire ecosystem of data and simulation assets), X-Humanoid is playing a shrewd hand to standardise the development of practical, autonomous robots. Releasing the first nationally certified embodied AI model for free is a direct attempt to build a foundational platform, one that could lower the barrier to entry and accelerate the entire field. It signals a strategic push beyond often-siloed academic projects and towards common ground for building robots that can finally pull their weight and get the job done.